Disk speed is an important part of measuring a server's performance. AWS offers many different types of EBS volumes and uses a burst bucket model, similar to the one used by T2 instances, to determine the overall speed of your disk.

Most server workloads involve some form of memory caching, so if you have enough RAM, disk speed may not matter much; once a file is read, it can stay in memory for a while. But for write-intensive workloads, disk speed becomes the limiting factor and can make or break your server's performance.

IOPS and SSD performance explained

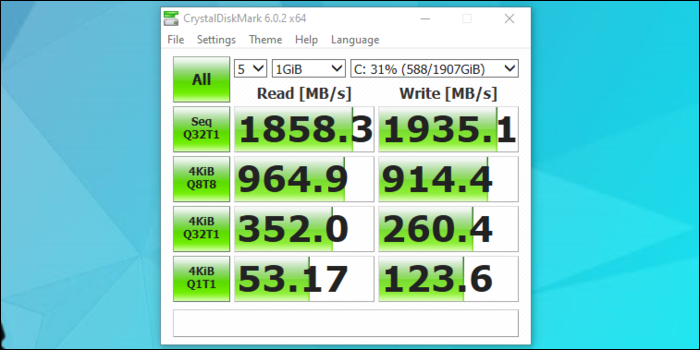

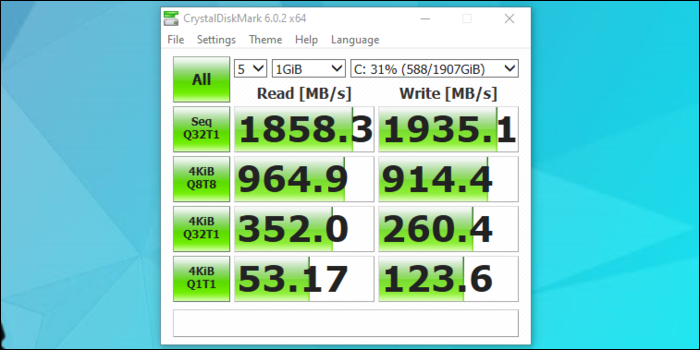

AWS lists and measures SSD speed in I/O operations per second (IOPS). This is largely just a measure of the device's 4K random read and write speed.

SSDs perform differently under different workloads, so there are a few ways to measure how fast they are. The first is sequential read and write speed, which measures how quickly the drive can read or write a large file. This speed does matter, especially when working with big data, but it is the ideal scenario; in the real world, SSDs often have to fetch data from multiple locations at the same time.

A better metric is random performance. This benchmark reads and writes data in 4,096-byte chunks at random locations, hence the name "4K random." It more accurately mimics the real-world load an SSD may face.

Random benchmark results can vary with queue depth, a measure of how much work the SSD has queued up at a given moment. When you ask the SSD for a bunch of files at once, the queue depth is high, which speeds up performance. Baseline performance, however, is measured at a queue depth of 1, which seems to be what AWS measures for its SSDs.

IOPS is a measure of how many actual operations are taking place. The formula to find IOPS from MB/s is:

IOPS = (MBps / KB Per Operation) * 1024

And since we're reading 4 KB at a time, the formula becomes:

IOPS = MBps * 256

The desktop SSD in the screenshot above would come in at more than 13,000 IOPS, which is pretty good for a 2 TB NVMe SSD.
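The conversion above is easy to sketch in a few lines of Python. The function names here are made up for illustration; the only inputs are the formula from the text and the assumption that every operation is the same size (4 KB by default).

```python
def mbps_to_iops(mbps: float, kb_per_op: float = 4.0) -> float:
    """Convert throughput in MB/s to operations per second."""
    return mbps / kb_per_op * 1024


def iops_to_mbps(iops: float, kb_per_op: float = 4.0) -> float:
    """Inverse conversion: operations per second back to MB/s."""
    return iops * kb_per_op / 1024


if __name__ == "__main__":
    # 13,000 IOPS of 4 KB operations is only about 50 MB/s of throughput.
    print(round(iops_to_mbps(13_000), 1))  # 50.8
    print(mbps_to_iops(50))                # 12800.0
```

This also shows why a 4K random figure looks so small next to a sequential one: each operation moves very little data.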

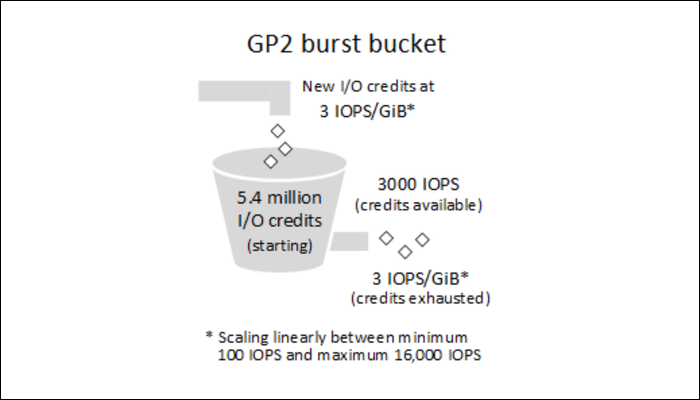

The burst bucket model

The main thing that complicates AWS EBS volumes is burst performance. It works much the same way as T2/T3 instances do: when your disk is idle, it accumulates I/O credits at a rate determined by the size of the volume.

These credits go into a "bucket" that holds up to a maximum of 5.4 million of them, enough to sustain maximum performance for 30 minutes. The bucket starts full to allow for fast instance boot and application startup.

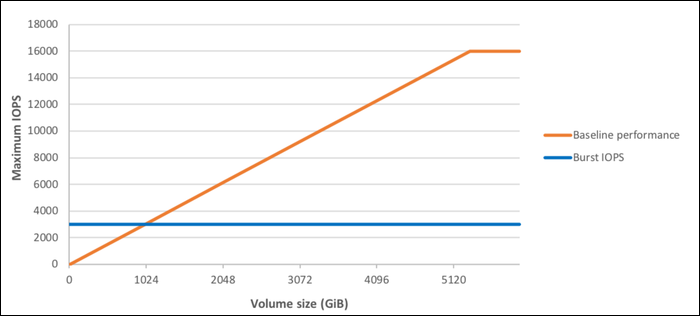

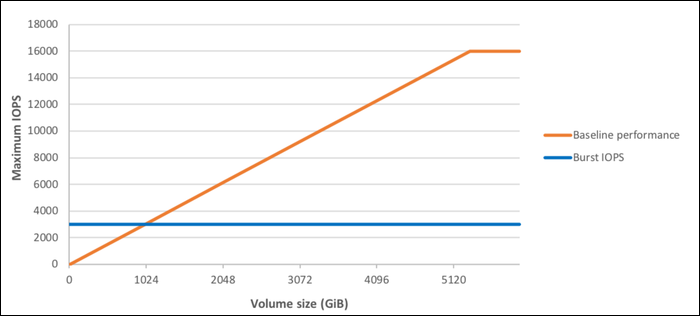

Credits are drained from the bucket to power burst performance. gp2 has a maximum performance of 3,000 IOPS, so it can only spend 3,000 credits per second.

Volumes earn I/O credits at a rate of 3 per GB per second. This means that if you have a volume larger than 1 TB, your bucket is always full and you don't have to worry about burst performance. Anything smaller than that will be limited to its baseline performance, based on the number of credits it earns, once the bucket runs dry.
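To make the bucket arithmetic concrete, here is a rough Python sketch using only the numbers quoted above (5.4 million credits, a 3,000 IOPS ceiling, 3 credits per GB per second). It is a simplified model, not AWS's actual accounting: the function names are hypothetical, and it ignores any minimum baseline AWS may apply to very small volumes.

```python
BUCKET_CAPACITY = 5_400_000  # maximum I/O credits (from the text)
BURST_IOPS = 3_000           # gp2 burst ceiling (from the text)
CREDITS_PER_GB = 3           # credits earned per GB per second (from the text)


def baseline_iops(size_gb: float) -> float:
    """Credits earned per second, capped at the burst ceiling."""
    return min(size_gb * CREDITS_PER_GB, BURST_IOPS)


def burst_seconds(size_gb: float, credits: float = BUCKET_CAPACITY) -> float:
    """Seconds a volume can hold BURST_IOPS before the bucket is empty."""
    net_drain = BURST_IOPS - baseline_iops(size_gb)
    if net_drain <= 0:
        return float("inf")  # the bucket refills as fast as it drains
    return credits / net_drain


if __name__ == "__main__":
    print(baseline_iops(100))    # 300
    print(burst_seconds(100))    # 2000.0
    print(burst_seconds(1000))   # inf
```

Because the volume keeps earning credits while it bursts, a full bucket lasts slightly longer than the flat 30 minutes: in this model a 100 GB volume drains at a net 2,700 credits per second and lasts 2,000 seconds.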

If you need more sustained performance, you can rent a larger volume or use a provisioned IOPS volume (io1). Although these cost more per GB, they let you buy IOPS outright. You can buy between 100 and 64,000 IOPS, at a rate of $0.065 per provisioned IOPS per month. This is only truly cost-effective if you want more than 3,000 IOPS. For anything below that, you're effectively paying double for the volume. As an example, if you need a 64 GB volume with 3,000 IOPS, you can simply provision a 1 TB gp2 volume for half the price. But if you want the extra speed, you can pay for it.
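The "half the price" comparison can be checked with a quick calculation. In this sketch only the $0.065 per provisioned IOPS comes from the text; the per-GB prices ($0.125 for io1 and $0.10 for gp2) are assumptions used for illustration, so pass in current figures for your region before relying on the result. The function names are hypothetical.

```python
def io1_monthly_cost(size_gb: float, iops: int,
                     gb_price: float = 0.125,    # assumed per-GB price
                     iops_price: float = 0.065   # per provisioned IOPS (from the text)
                     ) -> float:
    """Monthly cost of an io1 volume: storage plus provisioned IOPS."""
    return size_gb * gb_price + iops * iops_price


def gp2_monthly_cost(size_gb: float, gb_price: float = 0.10) -> float:
    """Monthly cost of a gp2 volume (assumed per-GB price, storage only)."""
    return size_gb * gb_price


if __name__ == "__main__":
    # 64 GB io1 with 3,000 provisioned IOPS vs. a 1 TB gp2 volume
    print(round(io1_monthly_cost(64, 3000), 2))
    print(round(gp2_monthly_cost(1000), 2))
```

Under these assumed prices, nearly all of the io1 bill comes from the provisioned IOPS rather than the storage, which is why the oversized gp2 volume comes out at roughly half the cost.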

Hard drive performance (st1 and sc1)

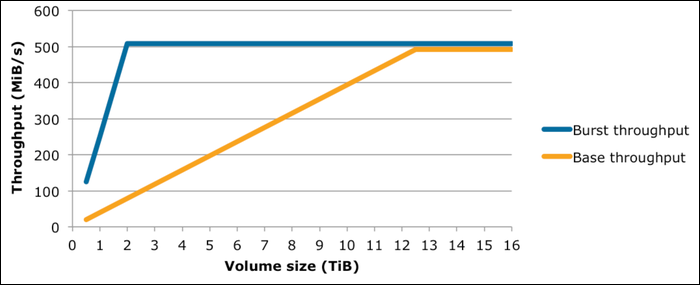

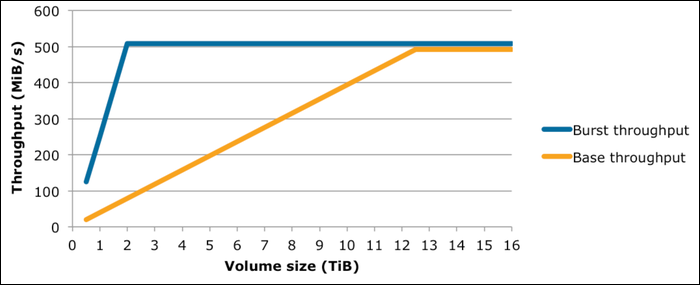

AWS's hard drive-based EBS volumes also use a burst bucket model, but hard drives work a little differently than SSDs, so their performance is not measured in IOPS. Because a hard drive reads from a spinning disk, its read and write speeds are fixed. Random reads and writes slow this down significantly (one of the main disadvantages of hard drives), so AWS uses sequential read speeds here.

For st1, baseline speed scales at 40 MiB/s per TB, starting at 20 MiB/s for the minimum volume size of 500 GB.

Burst speed scales at 250 MiB/s per TB, up to a maximum of 500 MiB/s. Volumes larger than 12 TB can sustain the maximum speed 100% of the time. Anything smaller, and you will be limited by your burst credit balance.

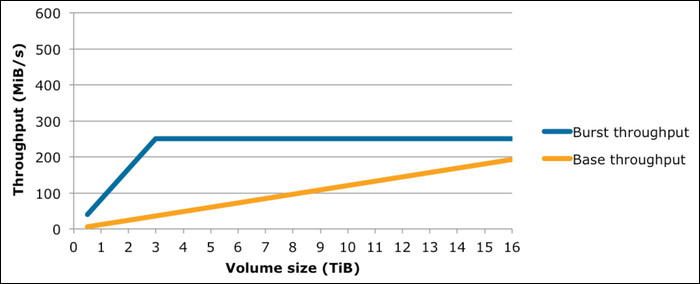

For sc1, baseline speed scales at 12 MiB/s per TB, starting at 6 MiB/s for the minimum volume size of 500 GB. This makes it much slower, and it can never sustain 100% of its burst capacity (but it's cheaper).

The burst speed is also limited: it scales at 80 MiB/s per TB, up to a maximum of 250 MiB/s. This equates to approximately 8,000 IOPS, but again, this is probably the sequential speed, and you won't see random speeds that high on any hard drive.
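The scaling rules for both hard drive types fit in a small lookup table. This is a sketch built only from the per-TB rates and caps quoted above; the names are hypothetical, and it treats TB and TiB loosely, as the text does.

```python
# Per-TB rates and caps in MiB/s, taken from the figures in the text.
HDD_TYPES = {
    "st1": {"base_per_tb": 40.0, "burst_per_tb": 250.0, "max": 500.0},
    "sc1": {"base_per_tb": 12.0, "burst_per_tb": 80.0, "max": 250.0},
}


def throughput(volume_type: str, size_tb: float) -> tuple[float, float]:
    """Return (baseline, burst) throughput in MiB/s for a volume size in TB."""
    spec = HDD_TYPES[volume_type]
    base = min(spec["base_per_tb"] * size_tb, spec["max"])
    burst = min(spec["burst_per_tb"] * size_tb, spec["max"])
    return base, burst


if __name__ == "__main__":
    print(throughput("st1", 0.5))   # (20.0, 125.0)
    print(throughput("sc1", 0.5))   # (6.0, 40.0)
    print(throughput("sc1", 16))    # (192.0, 250.0)
```

When the baseline equals the burst figure, the volume can run at full speed indefinitely. In this model an sc1 volume's baseline stays below its 250 MiB/s cap even at 16 TB, which matches the point that it never sustains its full burst rate.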

How to measure your disk's speed in the real world

You could use a tool like dd to measure sequential write speed, but that doesn't stress the disk enough to be useful and isn't indicative of any real use case.

To get something better, install a disk benchmarking tool called fio from your distribution's package manager:

sudo apt-get install fio

Then, run it with the following command:

fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_read_write.fio --bs=4k --iodepth=64 --size=250M --readwrite=randrw --rwmixread=80

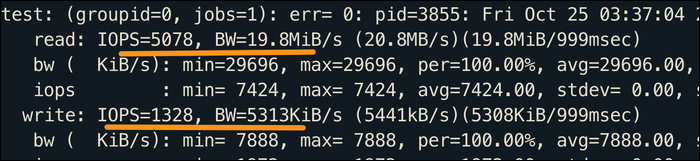

This creates a 250 MB file and runs random read and write tests at a ratio of 80% reads to 20% writes, giving you a much more accurate view of your drive's actual performance.
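If you want to collect the numbers from a script rather than read them off the terminal, one option is to ask fio for JSON with its `--output-format=json` flag and pull out the IOPS figures. The sketch below wraps the same command as above; the helper names are hypothetical, and it assumes fio is installed and prints nothing but the JSON report on standard output.

```python
import json
import subprocess


def build_fio_command(filename: str = "random_read_write.fio",
                      size: str = "250M", read_pct: int = 80) -> list[str]:
    """Same fio invocation as in the article, plus JSON output."""
    return [
        "fio", "--randrepeat=1", "--ioengine=libaio", "--direct=1",
        "--gtod_reduce=1", "--name=test", f"--filename={filename}",
        "--bs=4k", "--iodepth=64", f"--size={size}",
        "--readwrite=randrw", f"--rwmixread={read_pct}",
        "--output-format=json",
    ]


def summarize(report: dict) -> dict:
    """Extract read and write IOPS for the first job in a fio JSON report."""
    job = report["jobs"][0]
    return {"read_iops": job["read"]["iops"],
            "write_iops": job["write"]["iops"]}


def run_fio(**kwargs) -> dict:
    """Run fio and return its IOPS summary (requires fio on the PATH)."""
    result = subprocess.run(build_fio_command(**kwargs),
                            capture_output=True, text=True, check=True)
    return summarize(json.loads(result.stdout))
```

Calling `run_fio(size="25M")` would repeat the short test, and `run_fio()` the 250 MB one, so the two runs can be compared side by side.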

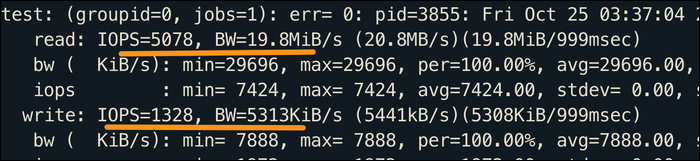

A quick test with a 25 MB file shows the benefits of the AWS burst bucket model. The gp2 volume can burst to a high speed for a short while to handle the transfer smoothly. With such a small file, the SSD can actually exceed the 3,000 IOPS limit, but only for a second.

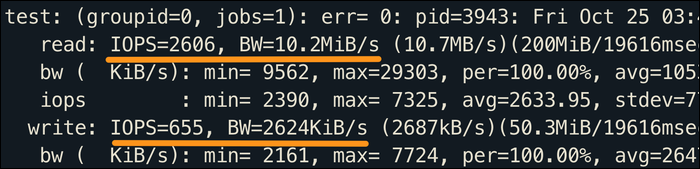

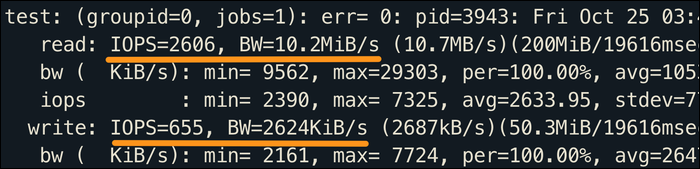

A longer test with a 250 MB file gives a better view of how the SSD will perform under heavier loads. In this case, the test takes more than a second, so speed is capped by the burst IOPS rate, reaching 2,600 IOPS.

Of course, if we let this test run for more than 30 minutes, the gp2 volume would run out of credits and slow down to its baseline, which at 3 IOPS per GB is only 24 IOPS for an 8 GB volume (AWS documents a 100 IOPS minimum for gp2, so very small volumes bottom out there in practice). But you probably won't run into loads that use 100% of your disk and, if you do, you can always use a larger disk with guaranteed performance or provision IOPS directly.